is a very real thing.”

“I think the average person has no idea just how much of the content they consume on social media, if it’s not an outright bot, is a human using AI in the loop to generate that content at scale, to manipulate and evade,” he added.

To address the rise of bots, the founders are looking toward new technology such as zero-knowledge proofs (aka zk proofs), a cryptographic technique that lets someone prove a claim, such as owning something on a platform, without revealing the underlying information. They're envisioning communities where admins could turn the dials, so to speak, to verify that a poster is human before allowing them to join the conversation.

“The world is going to be flooded with bots, with AI agents,” Rose pointed out, and those bots could infiltrate communities where people are trying to make genuine human connections. Something like this recently occurred on Reddit, where researchers secretly used AI bots to pose as real people on a forum to test how AI could influence human opinion.

“We are going to live in a world where the vast, vast majority of the content we’re seeing is in … some shape or form, AI-generated, and it is a terrible user experience if the reason you’re coming to a place is for authentic human connection, and it’s not with humans — or it’s with people masquerading as humans,” Ohanian said.

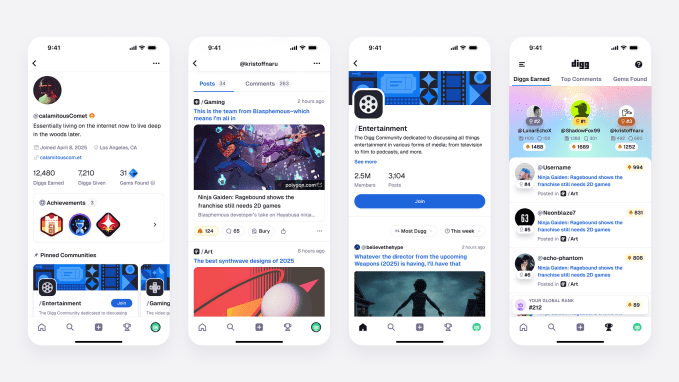

He explained that social sites could test whether someone is a person in a number of ways. For instance, he suggested, a comment could carry more weight if the poster has owned their device for a longer period of time.

Rose said that the site could also offer different levels of service, based on how likely someone was to be human.

If you signed up with a throwaway email address and used a VPN, for example, then maybe you would only be able to get recommendations or engage in some simpler ways. Or if you were anonymous and typed in a comment too quickly, the site could ask you to take an extra step to prove your humanity, like verifying your phone number or even paying a small fee if the number you provided was disposable, Rose said.

“There’s going to be these tiers that we do, based on how you want to engage and interact with the actual network itself,” he said.

However, the founders stressed they’re not anti-AI. They expect to use AI to help in areas like site moderation, including de-escalating situations where someone starts to stir up trouble.

In addition to verifying humans, the founders envision a service where moderators and creators financially benefit from their efforts. “I do believe the days of unpaid moderation by the masses — doing all the heavy lifting to create massive, multi-million-person communities — has to go away. I think these people are putting in their life and soul into these communities, and for them not to be compensated in some way is ridiculous to me. And so we have to figure out a way to bring them along for the ride,” Rose said.

As one example, he pointed to how Reddit trademarked the term “WallStreetBets,” the name of a forum created by a Reddit user. Rose thinks a company should instead support creators like this, who add value to a community, rather than try to take ownership of their work as Reddit did.

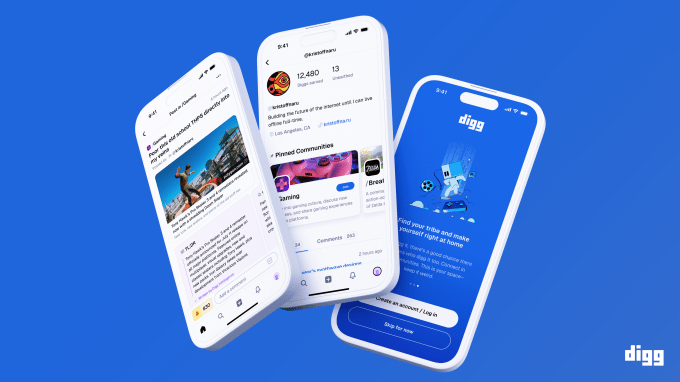

By combining an improved user experience with a model that lets creators monetize their work, the founders think Digg itself will benefit. “I want to believe the business model that will make Digg successful is one that aligns all those stakeholders. And I think it is very, very possible,” Ohanian said.